About Us

Who We Are

The Mitchell C. Hill Center for Digital Innovation is a student-powered learning and research center within the College of Business Administration at Cal Poly Pomona. Established in 2018 through a generous endowment from Avanade, the Center honors the legacy of Mitchell C. Hill, a 1980 CPP alumnus and founding CEO of Avanade.

Our mission is to cultivate innovation, inclusion, and excellence through student-led experiences in digital technologies, especially in areas like artificial intelligence, cybersecurity, infrastructure management, and global collaboration.

Our Mission

“To provide a unique, student-run, high-technology center where great ideas, diversity, and learning through experimentation are highly valued. We provide competition support to the cyber education community, research support to faculty, and exciting project opportunities for students. We engage industry partners in experimental initiatives that prepare students for the digital workforce.”

👉 Learn more about the endowment and how you can support future digital leaders »

Our Goals

-

Support educational outreach activities that promote and honor the living memory of Mitch Hill.

-

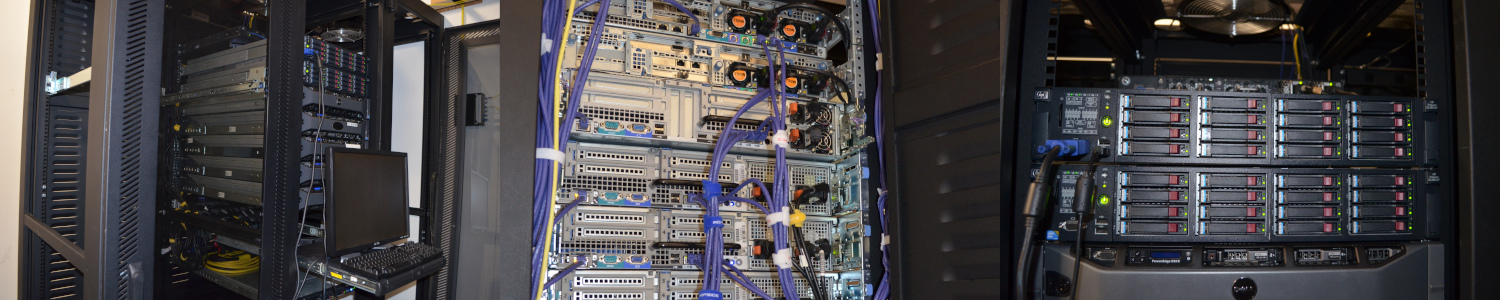

Maintain and update the Mitchell C. Hill Student-run Data Center (SDC) infrastructure to support the goals of the center.

-

Foster Student-Led Innovation and Experimentation. Encourage students to take the lead on experimental digital projects, promoting a hands-on learning culture where trial, failure, and creative problem-solving are part of the process.

-

Advance Experiential Learning Through Competitive Learning. Support and organize participation in competitions, hackathons, and digital challenges that enhance skills in emerging technologies.

-

Enable Research Collaboration in Digital Technologies. Provide faculty with support and opportunities for collaboration in research projects that explore cutting-edge technologies, including AI, digital twins, cybersecurity, IoT, and data science.

-

Promote a Supportive Environment for All Students. Create an inclusive environment that values diverse perspectives in digital innovation, ensuring all students—regardless of background—can contribute to and benefit from the center’s initiatives.

-

Develop Industry Partnerships for Real-World Projects. Engage industry partners to co-develop experimental digital initiatives, giving students exposure to real-world challenges and fostering innovation pipelines between academia and industry